10 Steps to Get your Site Indexed in Google

If it's not already, organic search traffic needs to be a priority for your digital marketing plan. More than half of traffic on the Internet comes from search engines (it could be as high as 60%). Organic search traffic is also hugely important in generating online sales. So of course you realize your SEO is a priority. But where to start? All SEO starts with getting your website found, crawled and indexed by search engine robots.

In this piece you'll learn about the technical and on page aspects of SEO and how you can use them to attract the attention of Google, Bing and other search engines.

There are three main steps for SEO success with Google, which are as follows:

a) Get your site crawled by Google bots.

b) Get your site indexed.

c) Get high search rankings.

In this article we are going to talk about the two important initial processes i.e. the crawling and indexing of web pages which leads to sites being shown in search results. Being seen by Google is important, as so far no other search engine has surpassed Google's high popularity and user-preference.

What is crawling?

Search engine crawling refers to bots browsing particular pages on the web. If you have a newly launched site, the Google bot will have to find (crawl) your site's web pages to know of its existence on the web. That said, the bot's job does not end with crawling. It must index the pages too.

What is indexing?

Once a bot has found a page by crawling it, it then has to add the page to the list of other crawled pages belonging to the same category. This process is known as indexing. In a book you will find that the content is systematically arranged by category, word, reference, etc. in the index. This makes it easier for readers to find exactly what they are looking for in the book. Similarly, search engines have an index of pages categorized in various ways. These pages are not the pages from your website exactly, but a screenshot of the pages as they were seen the last time they were crawled. These screenshots are the cached versions of the pages.

When a user enters a search query in Google search, Google quickly goes through these indexes to judge which pages are appropriate to return in results. With the help of complicated mathematical algorithms, Google is able to decide where in the search results each page should be returned. The accuracy of Google in returning appropriate pages to users' queries is what makes it such a huge search giant.

NOTE: The cached page returned may not be identical to the page that was recently changed on your website, however, when you add new content and provide easy accessibility to search engines they will crawl and index your pages over again in order to return the latest versions of your web pages in search results.

This all begs the question: How do I get my site indexed by Google? (Here the word "indexed" means crawled and indexed collectively.) There are many ways to get your website crawled and indexed by Google bots. See the steps below (which are in no

particular order):

1. Google Search Console Account

Get a Google Search Console Account and a Google Analytics account. Submit your site here. You can check Crawl Stats in Google Search Console to see how frequently Google is crawling your pages.

Google Search Console also allows you to see exactly how many pages have been indexed by Google.

2. Fetch as Google

Google Search Console provides the option to ask Google to crawl new pages or pages with updated content. This option is located under the Crawl section and is called Fetch as Google.

Type the URL path in the text box provided and click Fetch. Once the Fetch status updates to Successful, click Submit to Index. You can either submit individual URLs or URLs containing links to all the updated content. With the former you can submit up to 500 URL requests per week, with the latter you can make 10 requests per month.

3. XML Sitemaps

Sitemaps act as maps for the search bots, guiding them to your website's inner pages. You cannot afford to neglect this significant step toward getting your site indexed by Google. Create an XML sitemap and submit it to Google in your Google Search Console account.

4. Inbound Links

Search engine bots are more likely find and index your site when websites that are often crawled and indexed link to it. For this to work you need to build quality links to your site from other popular sites. You can learn more about obtaining quality links from the 10 Link Building Strategies blog post from

WooRank.

5. Crawl your website

In order to understand how search engines crawl your content, you should crawl your website. Woorank's Site Crawl does this for you, highlighting any issues that could be preventing search engines from accessing your pages while highlighting any optimizations that can be made to improve your SEO.

6. Clean Code

Make the Google bot's job of crawling and indexing your site easy by cleaning your site's backend and ensuring you have W3C compliant code. Also, never bloat your code. Ensure there is a good text to html ratio in your website

content.

7. Faster Site, Faster Indexing

Sites that are built to load quickly are also optimized for faster indexing by Google.

8. Good Internal Link Structure

Ensure that all pages of your website are interlinked with each other. Especially if your site's home page has been indexed make sure all the other pages are interconnected with it so they will be indexed too, but make sure there are not more than 200 links on any given page.

9. Good Navigation

Good navigation will contribute to the link structure discussed above. As important as navigation structure is for your users, it is equally important for the fast indexing of your site. Quick Tip: Use breadcrumb navigation.

10. Add Fresh Content

Add quality content to your site frequently. Content of value attracts the bots. Even if your site has only been indexed once, with the addition of more and more valuable content you urge the Google bot to index your site repeatedly. This valuable content is not limited to the visible content on the page but also the Meta data and other important SEO components on the website. Keep these SEO tips for Website Content in mind.

These are the basic things you need to do to facilitate faster crawling and indexing by Google bots, but there might be other issues keeping your site from being indexed. Knowing these potential problems will come handy if you find your site is not being indexed.

Other things to consider

- Server Issues: Sometimes it is not your website's fault that it is not getting indexed but the server's, that is, the server may not be allowing the Google bot to access your content. In this case, either the DNS delegation is clogging up the accessibility of your site or your server is under maintenance. Check for server issues if no pages have been indexed on your new site.

- De-indexed Domain: You may have bought a used domain and if so, this domain may have been de-indexed for unknown reasons (most probably a history of spam). In such cases, send a re-consideration request to Google.

- Robots.txt: It is imperative that you have a robots.txt file but you need to cross check it to see if there are any pages that have 'disallowed' Google bot access (more on this below). This is a major reason that some web pages do not get indexed.

- Meta Robots: The following Meta tag is used to ensure that a site is not indexed by search engines. If a particular web page is not getting indexed, check for the presence of this code.

- URL Parameters: Sometimes certain URL parameters can be restricted from indexing to avoid duplicate content. Be very careful when you use this feature (found in Google Search Console under Configuration), as it clearly states there that "Incorrectly configuring parameters can result in pages from your site being dropped from our index, so we don't recommend you use this tool unless necessary. Clean your URLs to avoid crawling errors.

- Check .htaccess File: The .htaccess file that is found in the root folder is generally used to fix crawling errors and redirects. An incorrect configuration of this file can lead to the formation of infinite loops, hindering the site from loading and being indexed.

- Other Errors: Check for broken links, 404 errors, and incorrect redirects on your pages that might be blocking the Google bot from crawling and indexing your site.

- You can use Google Search Console to find out the index status of your site. This tool is free and collects extensive data about the index status of your site on Google. Click the Health option in Google Search Console to check the Index Status graph, as shown below:

- meta name="robots" content="noindex, nofollow"

- You can use Google Search Console to find out the index status of your site. This tool is free and collects extensive data about the index status of your site on Google. Click the Health option in Google Search Console to check the Index Status graph, as shown in the screenshot below:

In case you want to check which URLs are not indexed, you can do so manually by downloading the SEOquake extension.

On Page SEO

The first step to getting found by search engines is to create your pages in a way that makes it easy for them. Start off by figuring out who your website is targeting and decide what keywords your audience are using in order to find you. This will determine which keywords you want to rank for. Best practice is to target longtail keywords as they account for the vast majority of search traffic, have less competition (making it easier to rank highly) and can indicate a searcher is in-market. They also have the added bonus of getting more clicks, having a higher click through rate (CTR), and more conversions.

There are quite a few free keyword research tools available out on the web.

Once you have your target keywords, use them to build an optimised foundation for your pages. Put your keywords into these on page elements:

- Title tag: Title tags are one of the most important on page factors search engines look at when deciding on the relevance of a page. Keywords in the title tags tell search engines what it will find on the page. Keep your title tags 60 characters or less and use your most important keyword at the beginning. A correctly used title tag looks like this:

- <title>Page Title</title>

- Meta description: Meta descriptions by themselves don't have much of an impact on the way search engines see your page. What they do influence is the way humans see your search snippet - the title, URL and description displayed in search results. A good meta description will get users to click on your site, increasing its CTR, which does have a major impact on your ranking. Keywords used in the descriptions appear in snippets in bold so, again, use yours here.

- Page Content: Obviously, you need to put your keywords in your page content. Don't stuff your content though, just use your keyword 3-5 times throughout the page. Incorporate some synonyms and latent semantic indexing (LSI) keywords as well.

- Add a blog: Aside from the more stereotypical content marketing SEO benefits, blogs are crawling and indexing powerhouses for your site. Sites that have blogs get an average of:

- 97% more indexed links

- 55% more visitors

- 434% more indexed pages

Adding and updating pages or content to your site encourages more frequent crawling by search engines.

Technical SEO

Robots.txt

After you've optimized your on page SEO factors for your target keywords, take on the technical aspects of getting Google to visit your page. Use a robots.txt file to help the search engine crawlers navigate your site. Very simply, a robots.txt file is a plain text file in the root directory of your website. It contains some code that dictates what user agents have access to what files. It usually looks something like this:

User-agent:* Disallow:

The first line, as you can probably guess, defines the user agent. In this case the * denotes all bots. Leaving the Disallow line blank gives bots access to the entire site. You can add multiple disallow lines to a single user-agent line, but you must make a separate disallow line for each URL. So if you want to block Googlebot from accessing multiple pages you need to add multiple disallows:

User-agent: Googlebot Disallow: /tmp/ Disallow: /junk/ Disallow: /private/

Do this for each bot you want to block from those pages. You can also use the robots.txt file to keep bots from trying to crawl certain file types like PowerPoints or PDFs:

User-agent:* Disallow: *.ppt$ Disallow: *.pdf$

To block all bots from your entire site, add a slash:

User-agent:* Disallow: /

It's good practice to block all robots from accessing your entire site while you are building or redesigning it. Restore access to crawlers when your site goes live or it can't be indexed. Also be sure that you haven't blocked access to Schema.org markup or it won't show up in Google's rich search results.

If you have a Google Search Console account, you can submit and test your file to the robots.txt Tester in the Crawl section.

XML Sitemaps

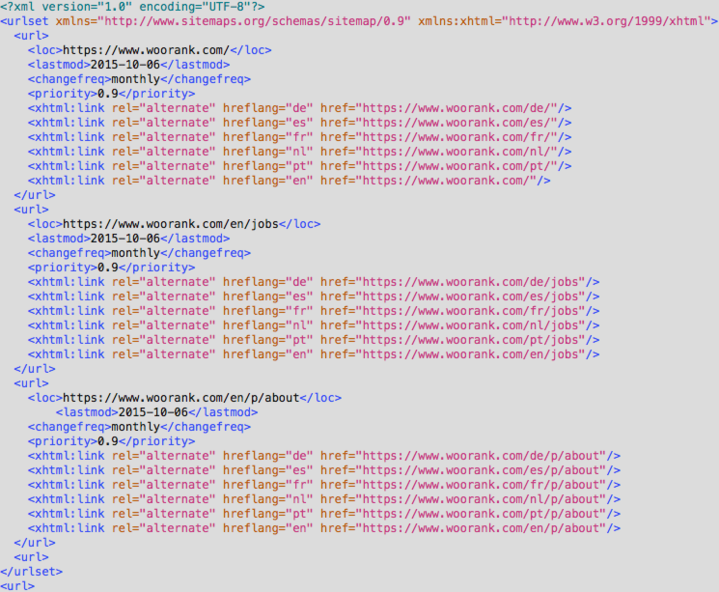

XML sitemaps are, like robots.txt files, text files stored in your site's directory. This file contains a list of all your site's URLs and a little extra information about each URL's importance, last update, update frequency and if there are other versions of the page in a different language. The sitemap encourages search engines to crawl your pages more efficiently. Sitemaps include the following elements:

- <urlset> - The opening and closing line of the sitemap. It's the current protocol standard.

- <url> - The parent tag for each URL in your site. Close it with

- <loc> - This is the absolute URL where the page is located. It's absolutely vital that you use absolute URLs consistently (http vs. https., wwww.example.com or example.com, etc.)

- <lastmod> - The date on which the page was last updated. Use YYYY-MM-DD format.

- <changefreq> - The frequency at which you make changes to the file. The more frequently you update a page the more often it will be crawled. Search engines can tell when you're lying, though, so if you don't change it as frequently as you set here, they will ignore it.

- <priority> - A page's importance within the site, ranging from 0.1 to 1.0.

- <xhtml:link> - This provides information about alternate versions of the page.

When properly implemented, your sitemap should look like this:

If you have a large or complicated site, or don't want to make a sitemap yourself, there are lots of tools out there who can help you generate your XML sitemap.

Sitemaps won't really help you rank better, at least not directly. But they help search engines find your site and all your URLs, and that makes it easier for you to climb the rankings. Speed this process up even more by submitting your sitemap directly through Google Search Console. Go to Sitemaps under Crawl, and click Add/Test Sitemap. You can do the same with Bing's Webmaster Tools. Use these tools to check for any errors in your sitemap that could impede the indexing of your site.

After you have submitted your sitemap to Google Search Console the tool will alert you of any Sitemap errors. Google has listed a few of these errors and an explanation on how to fix each of them here.

Off Page SEO

You can always take the direct route and submit your site's URL directly to the search engines. Submitting your site to Google is simple: visit their page, enter your URL, complete the Captcha to prove you're human and click Submit Request. You can also go through Search Console if you have an account. You can submit your site to Bing using their Webmaster Tools, which requires an account. Use crawl errors to find issues that might be blocking crawlers.

Make it easier for search engine spiders to find your site by getting your URL out there. Put a link to your website in your social media pages. This won't really help you rank better in search results, but Google does crawl and index social media pages, so it will still see your links. It's extra important that you have a Google+ account so you can use the rel="publisher" tag to get your company information to appear in Google's rich snippets. If you've got a YouTube account, post a short video explaining the features of your new site and add a link in the video description. If you're on Pinterest, pin a high-resolution screenshot with your URL and a description (remember to use your keywords in the description).

This last part of off-page SEO can be a bit tricky: Submitting your URL to a web directory. Web directories were once a common way for SEOs to generate easy backlinks for their sites. The issue, however, is that many of these sites had a lot of spam content and provided little value to users. So submitting your URL to a low quality directory could do more harm than good.

Do a little bit of homework on directories to find directories with high authority. Also check out trusted resources online for curated lists of credible directories.

In Conclusion

Getting found online is the end goal of your SEO. But before people can find you, the search engines need to first. You can always publish your site, sit back, relax and wait for them to come to you, but that won't get you the best results. Use the methods listed above to improve the crawling and indexing of your pages so you can start ranking faster and build your audience.

What steps have you taken to get your pages crawled and indexed by search engines? What on page and off-page challenges have you encountered?

Category: Technical SEO

Tags: Google Search Console, Search Engines, on page SEO, Off-page SEO

Title tag: How to Get Google to Index Your Site Fast

Meta description: If you want your website to rank, you need to get your site indexed. Follow these on page, technical and off-page optimizations to attract indexing bots.